Ozgur Kara

I am a Computer Science PhD student at the University of Illinois Urbana-Champaign (UIUC), advised by Professor James M. Rehg. My research aims to build the next generation of video AI by tackling three core challenges: efficiency, controllability, and safety. I design computationally efficient models for long-form video editing, develop frameworks that give users precise control over story and appearance, and build safe systems that protect visual media from unauthorized manipulation.

I completed a research internship at Adobe with Tobias Hinz in Summer 2024 (multi-shot video generation) and am currently at Google working with Du Tran (video generation). I am open to collaboration and always interested in discussing new research ideas; please feel free to contact me via email.

📃 Download my CV.

Email / Google Scholar / GitHub / LinkedIn / Twitter / Some Travels

Health Care Engineering Systems Center, 1206 W Clark St., UIUC, Urbana, IL, USA 61801

News


Industry Experience


Publications

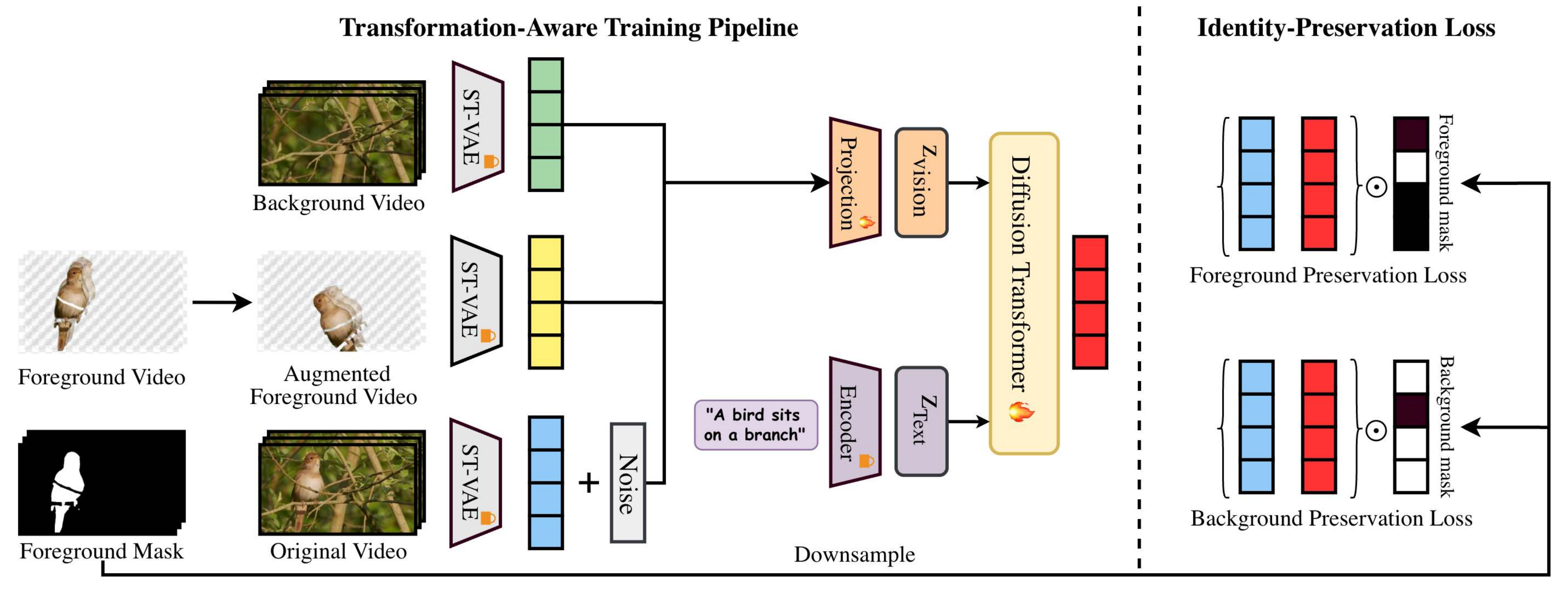

Layer-Aware Video Composition via Split-then-Merge

Ozgur Kara, Yujia Chen, Ming-Hsuan Yang, James M. Rehg, Wen-Sheng Chu, Du Tran

In Submission, 2026

Split-then-Merge (StM) is a new video composition framework that improves control and handles data scarcity by splitting unlabeled videos into foreground and background layers and then self-composing them.

Project Webpage / Paper

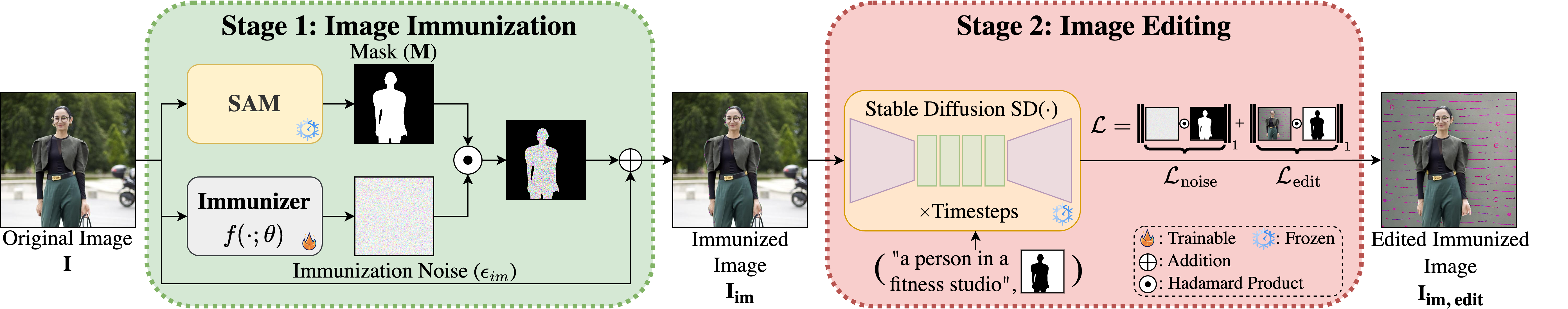

DiffVax: Optimization-Free Image Immunization Against Diffusion-Based Editing

Tarik Can Ozden*, Ozgur Kara*, Oguzhan Akcin, Kerem Zaman, Shashank Srivastava, Sandeep P. Chinchali, James M. Rehg (* denotes equal contribution)

The Fourteenth International Conference on Learning Representations (ICLR), 2026

DiffVax is an optimization-free image immunization framework that effectively protects against diffusion-based editing, generalizes to unseen content, is robust against counter-attacks, and shows promise in safeguarding video content.

Project Webpage / Paper

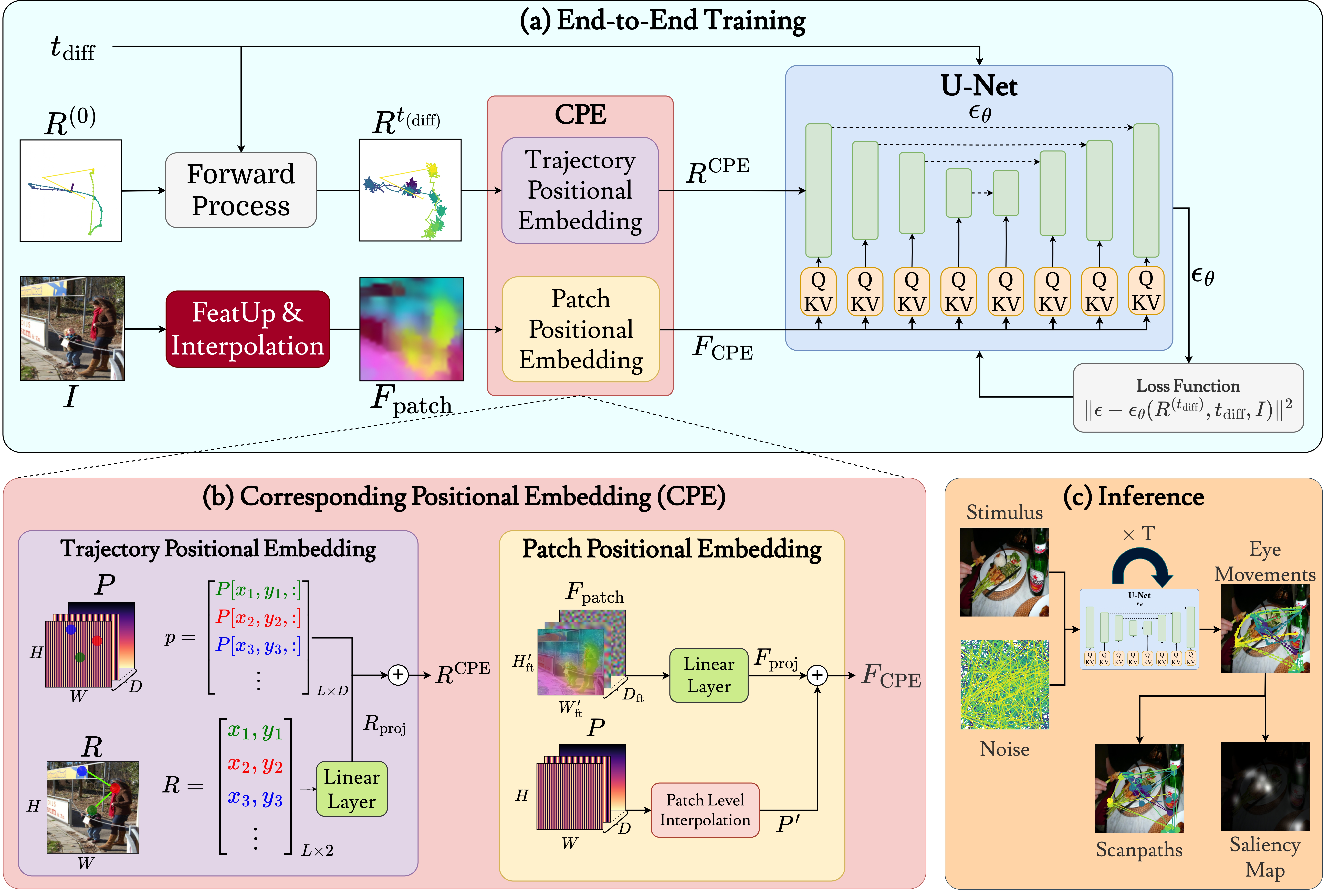

DiffEye: Diffusion-Based Continuous Eye-Tracking Data Generation Conditioned on Natural Images

Ozgur Kara*, Harris Nisar*, James M. Rehg (* denotes equal contribution)

The Thirty-ninth Annual Conference on Neural Information Processing Systems (NeurIPS), 2025

We propose DiffEye, a diffusion-based generative model for creating realistic, raw eye-tracking trajectories conditioned on natural images, which outperforms existing methods on scanpath generation tasks.

Project Webpage / Paper

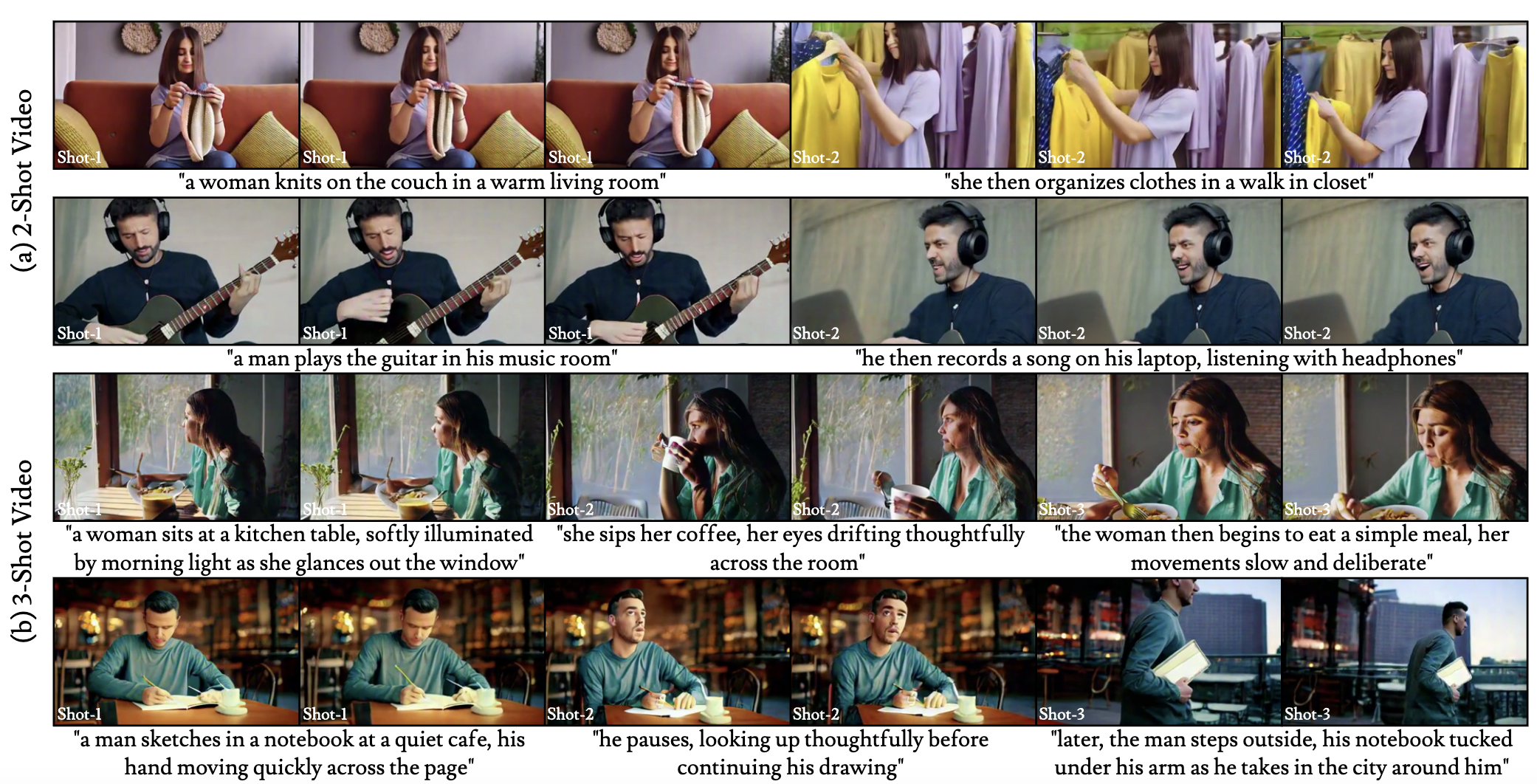

ShotAdapter: Text-to-Multi-Shot Video Generation with Diffusion Models

Ozgur Kara, Krishna Kumar Singh, Feng Liu, Duygu Ceylan, James M. Rehg, Tobias Hinz

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025

ShotAdapter enables text-to-multi-shot video generation with minimal fine-tuning, giving users control over the number, duration, and content of shots through shot-specific text prompts, and comes with a pipeline for collecting multi-shot video datasets.

Project Webpage / Paper

RAVE: Randomized Noise Shuffling for Fast and Consistent Video Editing with Diffusion Models

Ozgur Kara, Bariscan Kurtkaya, Hidir Yesiltepe, James M. Rehg, Pinar Yanardag

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024 (Highlight)

RAVE is a zero-shot, lightweight, and fast framework for text-guided video editing that supports videos of any length using pretrained text-to-image diffusion models.

Project Webpage / Paper / Code / HuggingFace Demo / Video

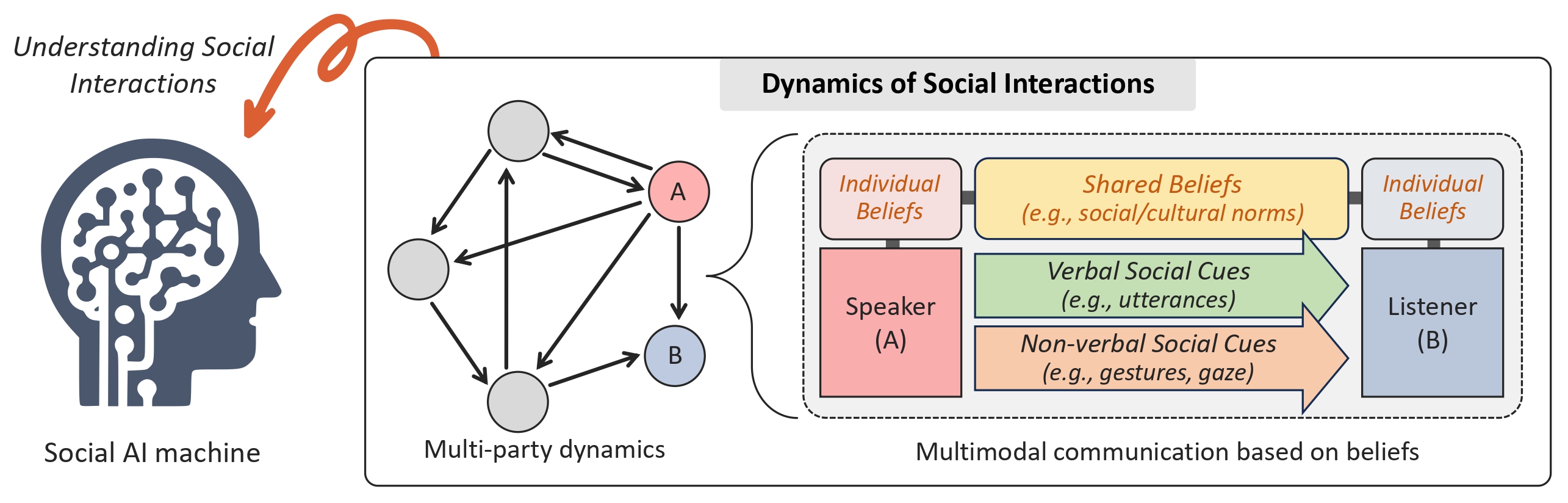

Towards Social AI: A Survey on Understanding Social Interactions

Sangmin Lee, Minzhi Li, Bolin Lai, Wenqi Jia, Fiona Ryan, Xu Cao, Ozgur Kara, Bikram Boote, Weiyan Shi, Diyi Yang, James M. Rehg

In Submission, 2025

This is the first survey to provide a comprehensive overview of machine learning studies on social understanding, encompassing both verbal and non-verbal approaches.

Paper

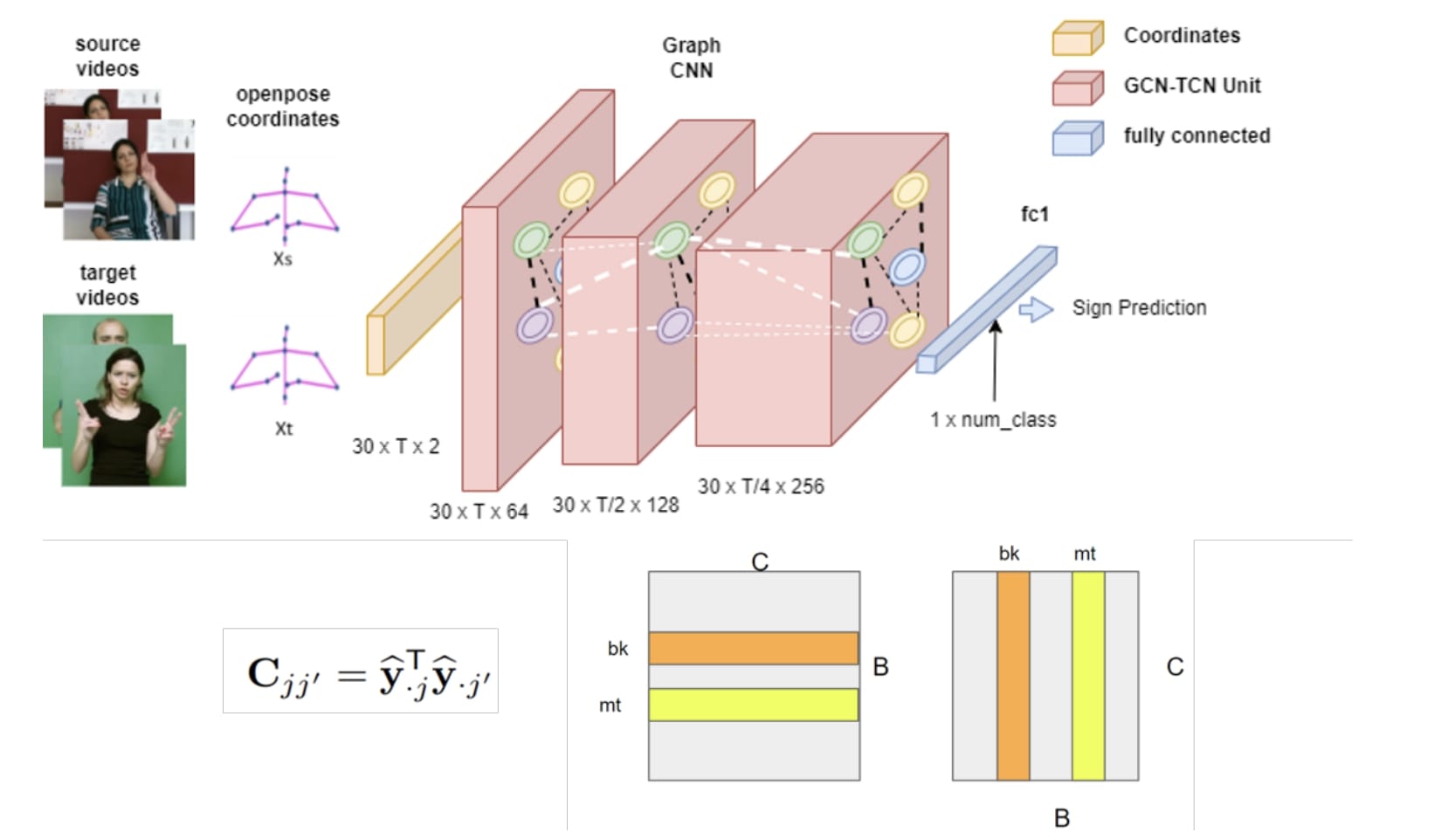

Transfer Learning for Cross-dataset Isolated Sign Language Recognition in Under-Resourced Datasets

Alp Kindiroglu*, Ozgur Kara*, Ogulcan Ozdemir, Lale Akarun (* denotes equal contribution)

IEEE International Conference on Automatic Face and Gesture Recognition (FG), 2024

This study provides a publicly available cross-dataset transfer learning benchmark built from two existing public Turkish sign language recognition (SLR) datasets.

Paper / Code

Leveraging Object Priors for Point Tracking

Bikram Boote, Anh Thai, Wenqi Jia, Ozgur Kara, Stefan Stojanov, James M. Rehg, Sangmin Lee

Instance-Level Recognition (ILR) Workshop at the European Conference on Computer Vision (ECCV), 2024 (Oral)

We propose a novel objectness regularization approach that makes points aware of object priors by forcing them to stay inside the boundaries of object instances.

Paper / Code

Domain-Incremental Continual Learning for Mitigating Bias in Facial Expression and Action Unit Recognition

Nikhil Churamani, Ozgur Kara, Hatice Gunes

IEEE Transactions on Affective Computing, 2022

We propose the novel use of Continual Learning (CL), in particular Domain-Incremental Learning (Domain-IL) settings, as a potent bias mitigation method to enhance the fairness of Facial Expression Recognition (FER) systems.

Paper / Code

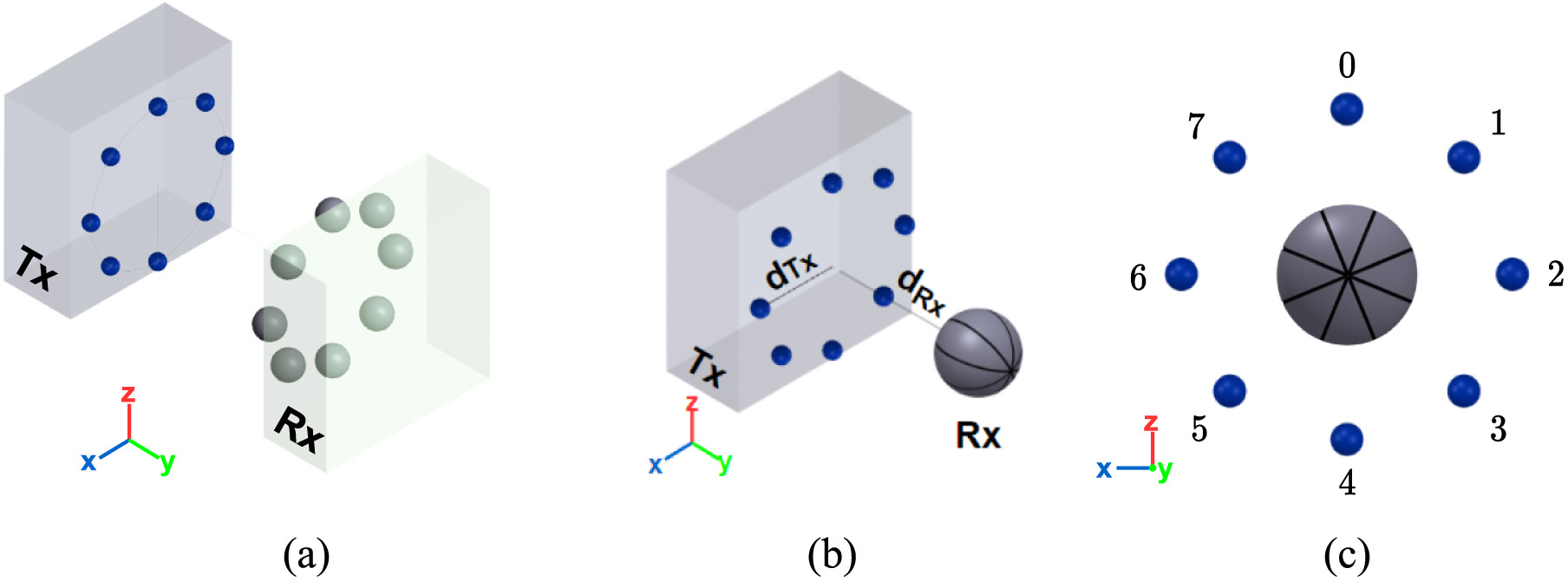

Molecular Index Modulation using Convolutional Neural Networks

Ozgur Kara, Gokberk Yaylali, Ali Emre Pusane, Tuna Tugcu

Nano Communication Networks Journal, 2022

We propose a novel convolutional neural network-based architecture for a uniquely designed molecular multiple-input-single-output topology, aimed at mitigating the detrimental effects of molecular interference in nano molecular communication.

Paper / Code

ISNAS-DIP: Image-Specific Neural Architecture Search for Deep Image Prior

Metin Ersin Arican*, Ozgur Kara*, Gustav Bredell, Ender Konukoglu (* denotes equal contribution)

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022

ISNAS-DIP is an image-specific Neural Architecture Search (NAS) strategy designed for the Deep Image Prior (DIP) framework, offering significantly reduced training requirements compared to conventional NAS methods.

Paper / Code / Video

Towards Fair Affective Robotics: Continual Learning for Mitigating Bias in Facial Expression and Action Unit Recognition

Ozgur Kara, Nikhil Churamani, Hatice Gunes

Workshop on Lifelong Learning and Personalization in Long-Term Human-Robot Interaction (LEAP-HRI), 2021

We propose the novel use of Continual Learning (CL) as a potent bias mitigation method to enhance the fairness of Facial Expression Recognition (FER) systems.

Paper / Code

Neuroweaver: a platform for designing intelligent closed-loop neuromodulation systems

Parisa Sarikhani, Hao-Lun Hsu, Ozgur Kara, Joon Kyung Kim, Hadi Esmaeilzadeh, Babak Mahmoudi

Brain Stimulation: Basic, Translational, and Clinical Research in Neuromodulation, Elsevier, 2021

Our interactive platform enables the design of neuromodulation pipelines through a visually intuitive and user-friendly interface. (Google Summer of Code 2021 project)

Paper / Code

Service & Recognition

This website is adapted from this source code.